The process of vulnerability disclosure can be riddled with frustrations, concerns about ethics, and communication failures. I have had tons of bugs go well. I have had tons of bugs go poorly.

I submit a lot of bugs, through both bounty programs (Bugcrowd/HackerOne) and direct reporting lines (Microsoft). I’m not here to discuss ethics. I’m not here to provide a solution to the great “vulnerability disclosure” debate. I am simply here to share one experience that really stood out to me, and I hope it causes some reflection on the reporting processes for all vendors going forward.

First, I’d like to give a little background on myself and my relationship with vulnerability research.

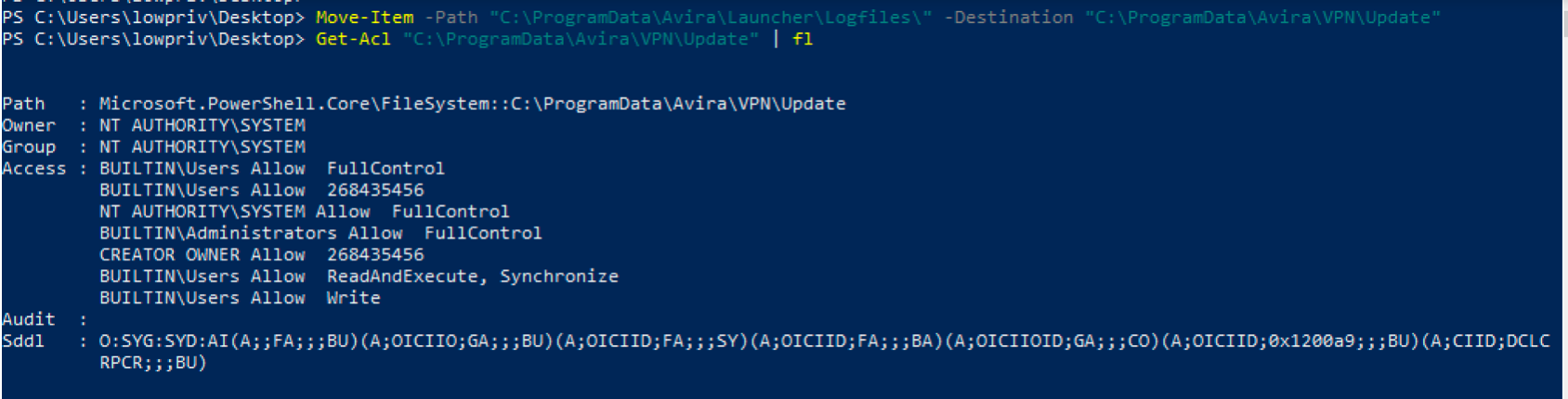

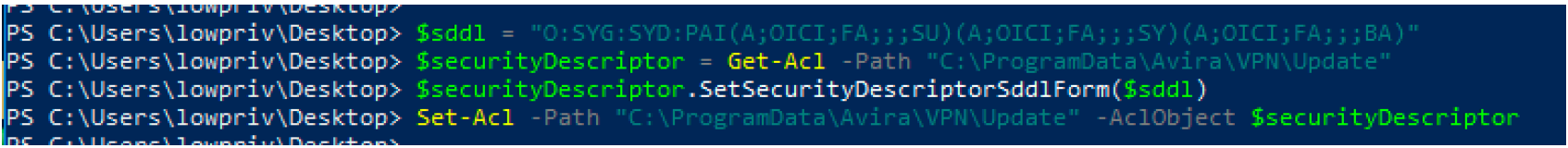

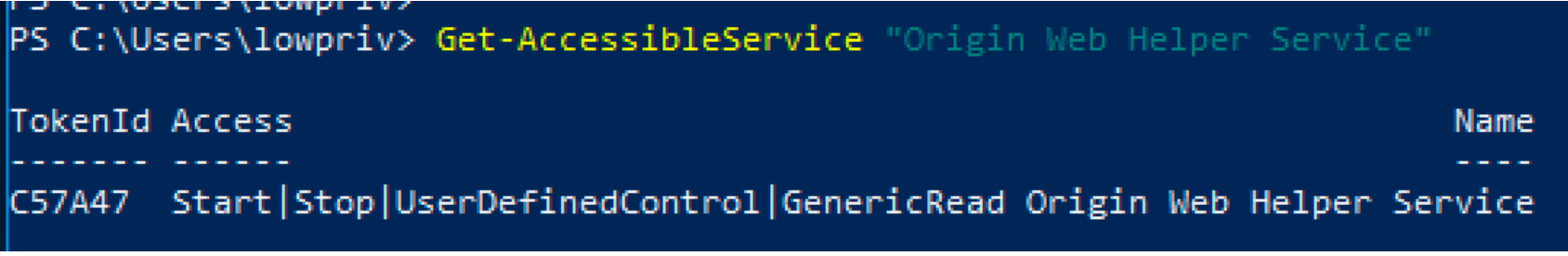

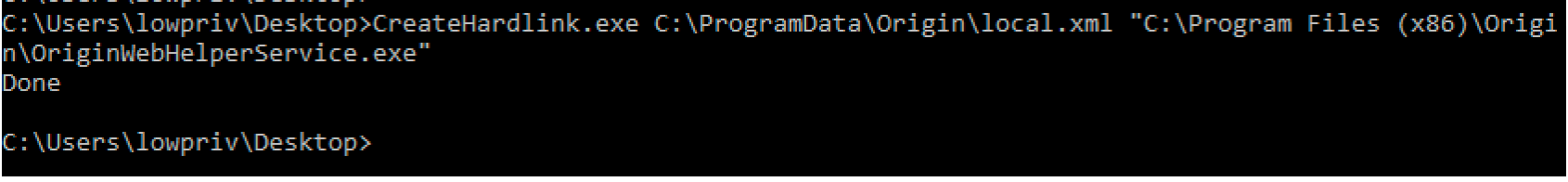

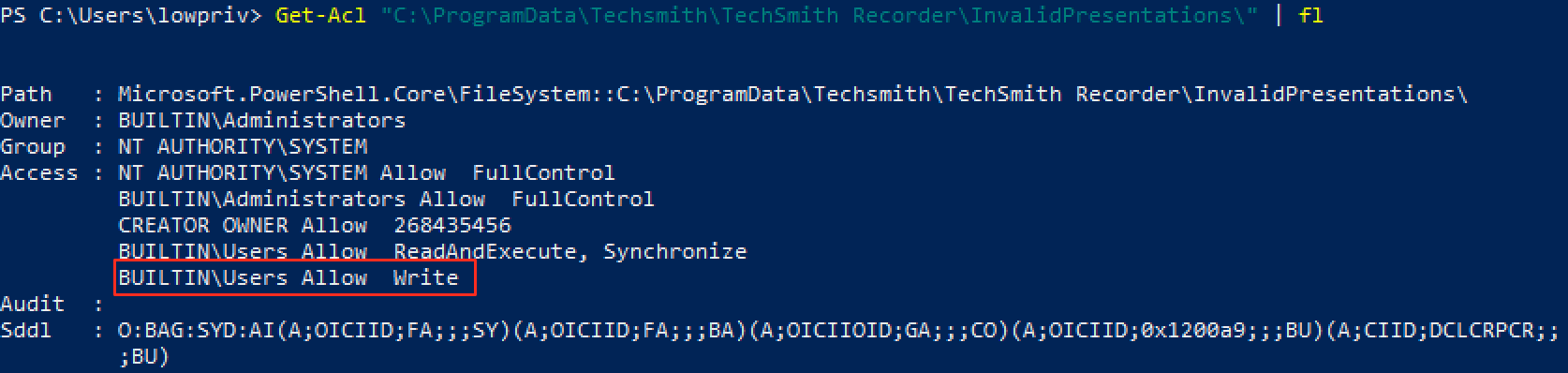

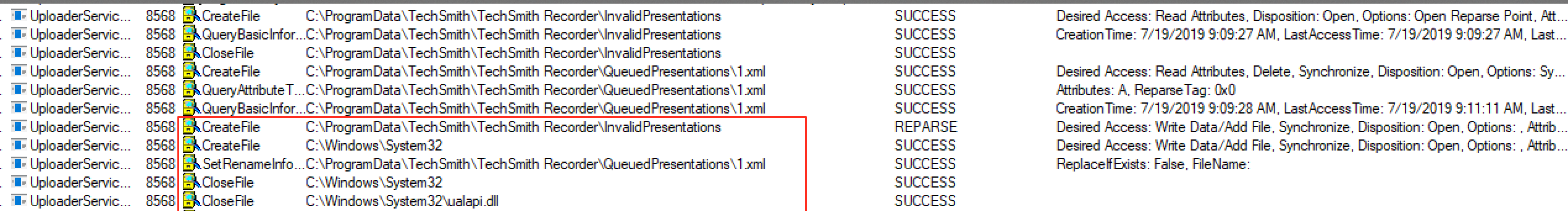

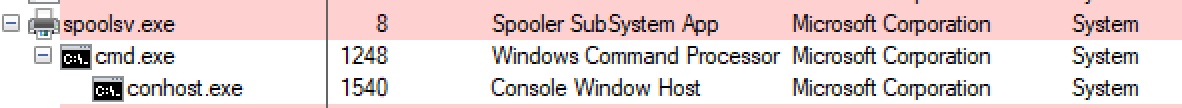

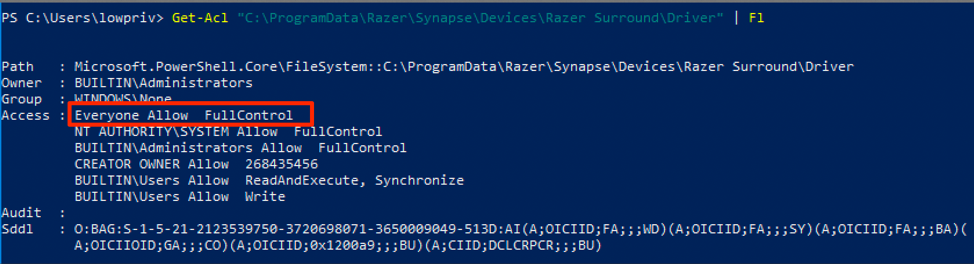

I’m not an experienced reverse engineer. I’m not a full-time developer. Do I know C/C++ well? No. I’m relatively new to the industry (3 years in). I give up my free time to do research and close my knowledge gaps. I don’t find crazy kernel memory leaks; rather, I find often-overlooked user-mode logic bugs (DACL overwrite bugs, anyone?).

Most importantly, I do vulnerability research (VR) as a hobby in order to learn technical concepts I’m interested in that don’t necessarily apply directly to my day job. While limited, my experience in VR comes with the same pains that everyone else has.

When I report bugs, the process typically goes one of two ways:

1. Find bug -> Disclose bug -> Vendor’s eyes open wide at bug -> Bug is fixed and CVE possibly issued (with relevant acknowledgement) -> Case closed

2. Find bug -> Disclose bug -> Vendor fails to see the impact, issues a “won’t fix” -> Case closed

When looking at these two situations, there are various factors that can determine if your report lands on #1 or #2. Such factors can include:

- Internal vendor politics/reorg

- Case handler experience/work ethic/communication (!!!!)

- Report quality (did you explain the bug well, and outline the impact the bug has on a product?)

Factors that you can’t control can start to cause frustration when they occur repeatedly. This is where the vendor needs to be open to feedback regarding their processes, and where researchers need to be open to feedback regarding their reports.

So, let us look at a case study in a vulnerability report gone wrong (and then subsequently rectified):

On Feb 16, 2018 at 2:37 PM, I sent an email to secure@microsoft.com with a write-up and PoC for RCE in the .SettingContent-ms file format on Windows 10. Here is the original email:

This situation is a good example where researchers need to be open to feedback. Looking back on my original submission, I framed the bug mostly around Office 2016’s OLE block list and a bypass of the Attack Surface Reduction Rules in Windows Defender. I did, however, mention in the email that “The PoC zip contains the weaponized .settingcontent-ms file (which enables code-execution from the internet with no security warnings for the user)”. This is a very important line, but it was overshadowed by the rest of the email.

On Feb 16, 2018 at 4:34 PM, I received a canned response from Microsoft stating that a case number was assigned. My understanding is that this email is fairly automated when a case handler takes (or is assigned) your case:

Great. At this point, it is simply a waiting game while they triage the report. After a little bit of waiting, I received an email on March 2nd, 2018 at 12:27pm stating that they successfully reproduced the issue:

Awesome! This means that they were able to take my write-up with PoC and confirm its validity. This is the point at which a lot of researchers get frustrated. You take the time to find a bug, you take the time to report it, you get almost immediate responses from the vendor, and once they reproduce it, things go quiet. This is understandable, since they are likely doing root cause analysis on the issue. This is also the critical point at which it is determined whether the bug is worth fixing.

I will admit, I generally adhere to the 90-day policy that Google Project Zero uses. I do not work for GPZ, and I don’t get paid to find bugs (or manage multiple reports). I tend to be lenient if the communication is there. If a vendor doesn’t communicate with me, I drop a blog post the day after the 90-day window closes.
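For illustration, the arithmetic behind a fixed 90-day window can be sketched in a few lines of Python. This is a simplification: the function names are hypothetical, and it assumes a flat 90 calendar days from the report date with no grace period or extensions, which is not necessarily how any real policy counts.

```python
from datetime import date, timedelta

# Assumption: a flat 90 calendar days, counted from the day of the report.
DISCLOSURE_WINDOW_DAYS = 90


def disclosure_deadline(reported: date, window_days: int = DISCLOSURE_WINDOW_DAYS) -> date:
    """Return the last day of the disclosure window (hypothetical helper)."""
    return reported + timedelta(days=window_days)


def window_closed(reported: date, today: date, window_days: int = DISCLOSURE_WINDOW_DAYS) -> bool:
    """True once 'today' falls after the last day of the window."""
    return today > disclosure_deadline(reported, window_days)


reported = date(2018, 2, 16)          # date of the original report
print(disclosure_deadline(reported))  # last day of the 90-day window
print(window_closed(reported, date(2018, 6, 1)))
```

For the report described here, submitted on Feb 16, 2018, this puts the end of the window at May 17, 2018.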

Vendors, PLEASE COMMUNICATE WITH YOUR RESEARCHERS!

In this case, I did as many researchers would do once more than a month goes by without any word…I asked for an update:

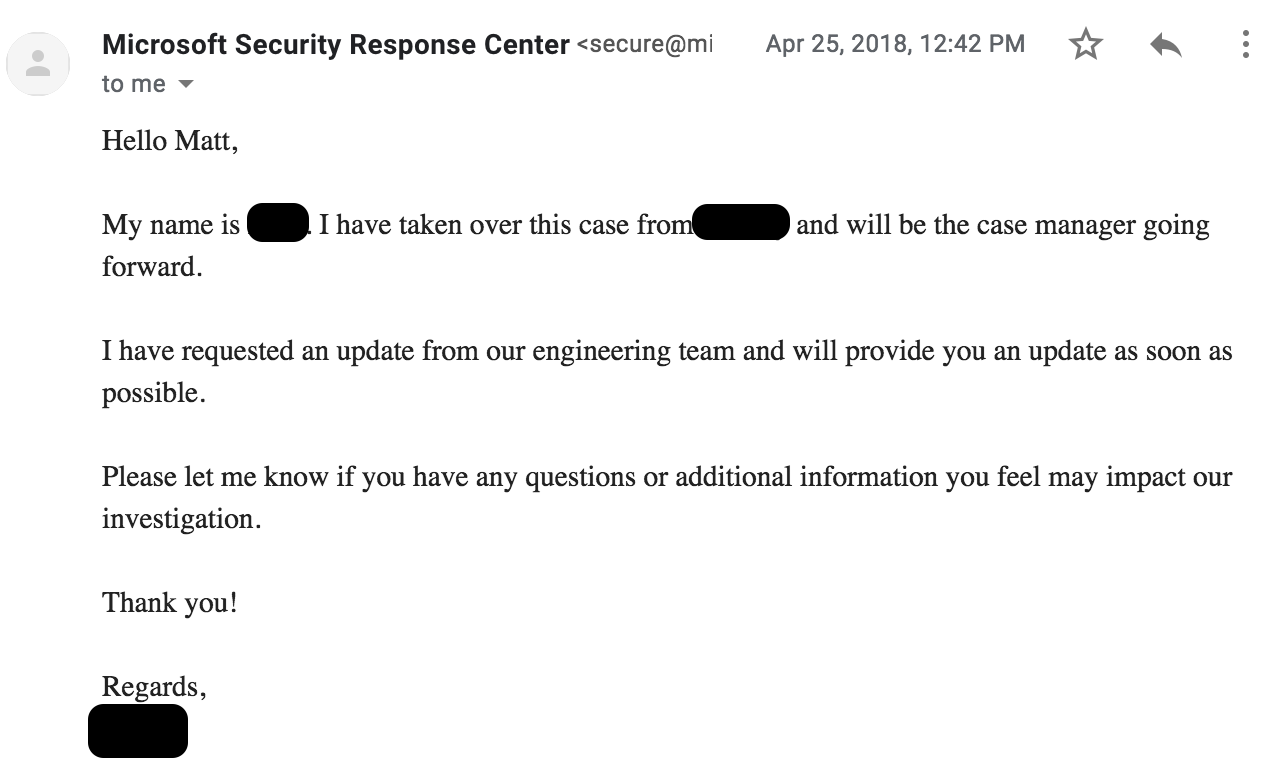

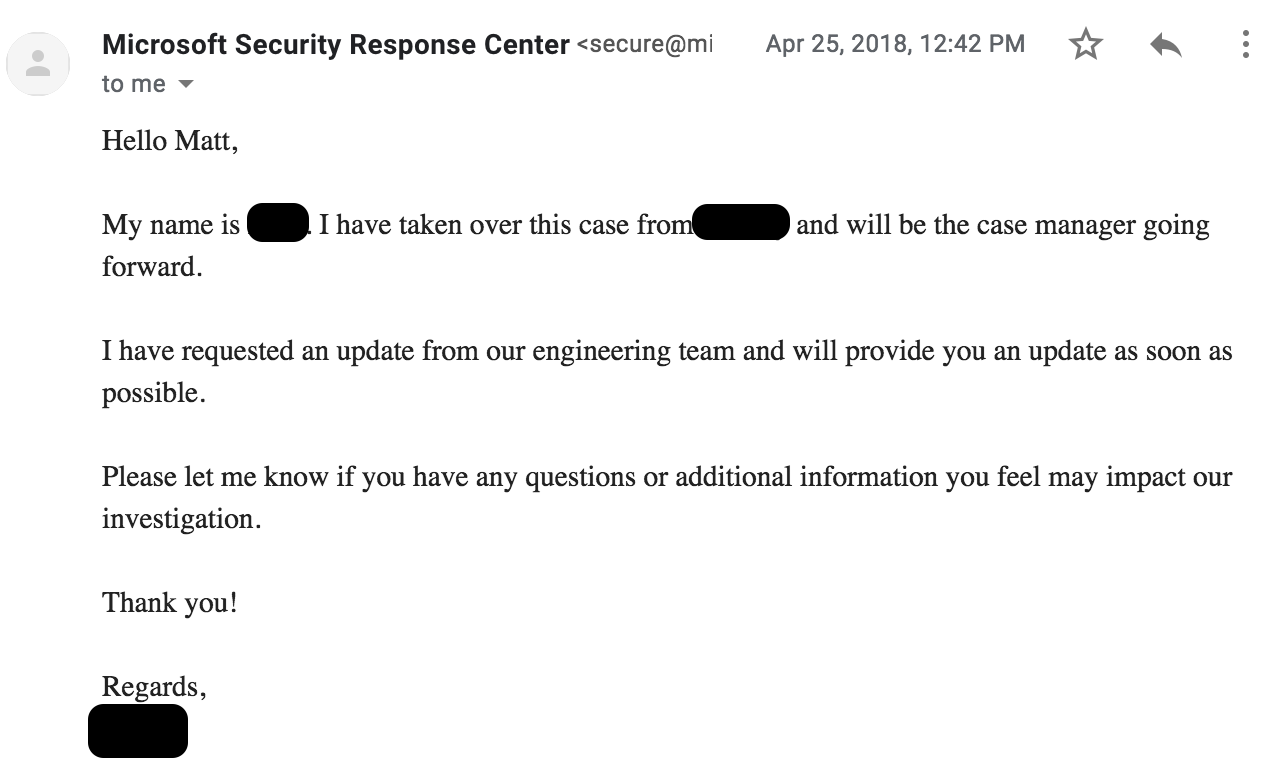

At this point, it has almost been a month and a half since I have heard anything. After asking for an update, this email comes in:

Interesting…I all of a sudden have someone else handling my case? I can understand this, as Microsoft is a huge organization with various people handling the massive load of reports they get each day. Maybe my case handler got swamped?

Let’s pause and evaluate things thus far: I reported a bug. This bug was assigned a case number. I was told they reproduced the issue, then I heard nothing for a month and a half. After reaching out, I found out the case was re-assigned. Why?

Vendors, this is what causes frustration. Researchers feel like they are being dragged along and kept in the dark. Communication is key if you don’t want 0days to end up on Twitter. In reality, a lot of us sacrifice personal time to find bugs in your products. If people feel like they don’t matter or are placed on the backburner, they are less likely to report bugs to you and more likely to sell them or drop them online.

Ok, so my case was re-assigned on April 25th, 2018 at 12:42 pm. I say “Thanks!!” a few days later and let the case sit while they work the bug.

Then, a little over a month goes by with no word. At this point, it has been over 90 days since I submitted the original report. In response, I sent another follow-up on June 1st, 2018 at 1:29pm:

After a few days, I get a response on June 4th, 2018 at 10:29am:

Okay. So, let’s take this from the top. On Feb 16, 2018, I reported a bug. After the typical process of opening a case and verifying the issue, I randomly get re-assigned a case handler after not hearing back for a while. Then, after waiting some time, I still don’t hear anything. So, I follow up and get a “won’t fix” response a full 108 days after the initial report.

I’ll be the first to admit that I don’t mind blogging about something once a case is closed. After all, if the vendor doesn’t care to fix it, then the world should know about it, in my opinion.

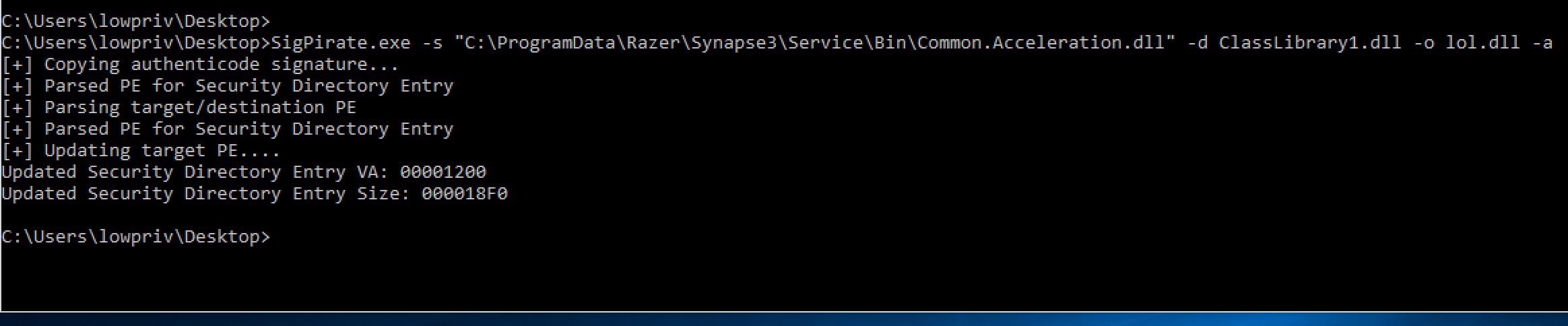

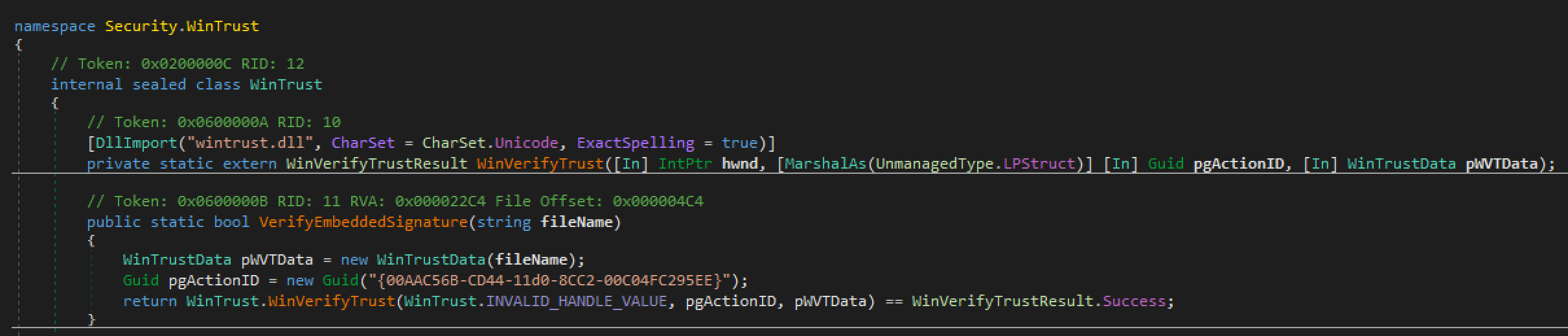

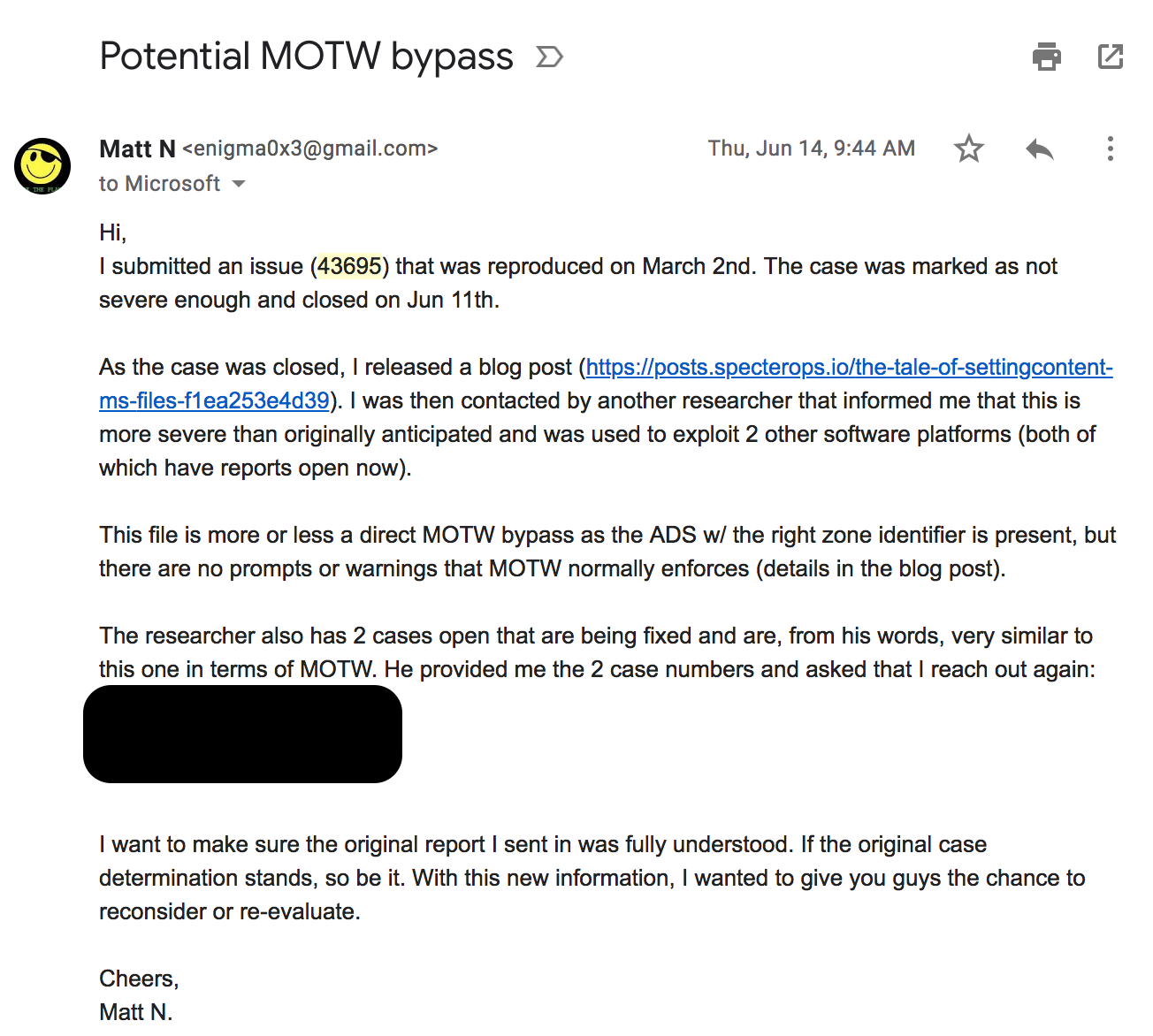

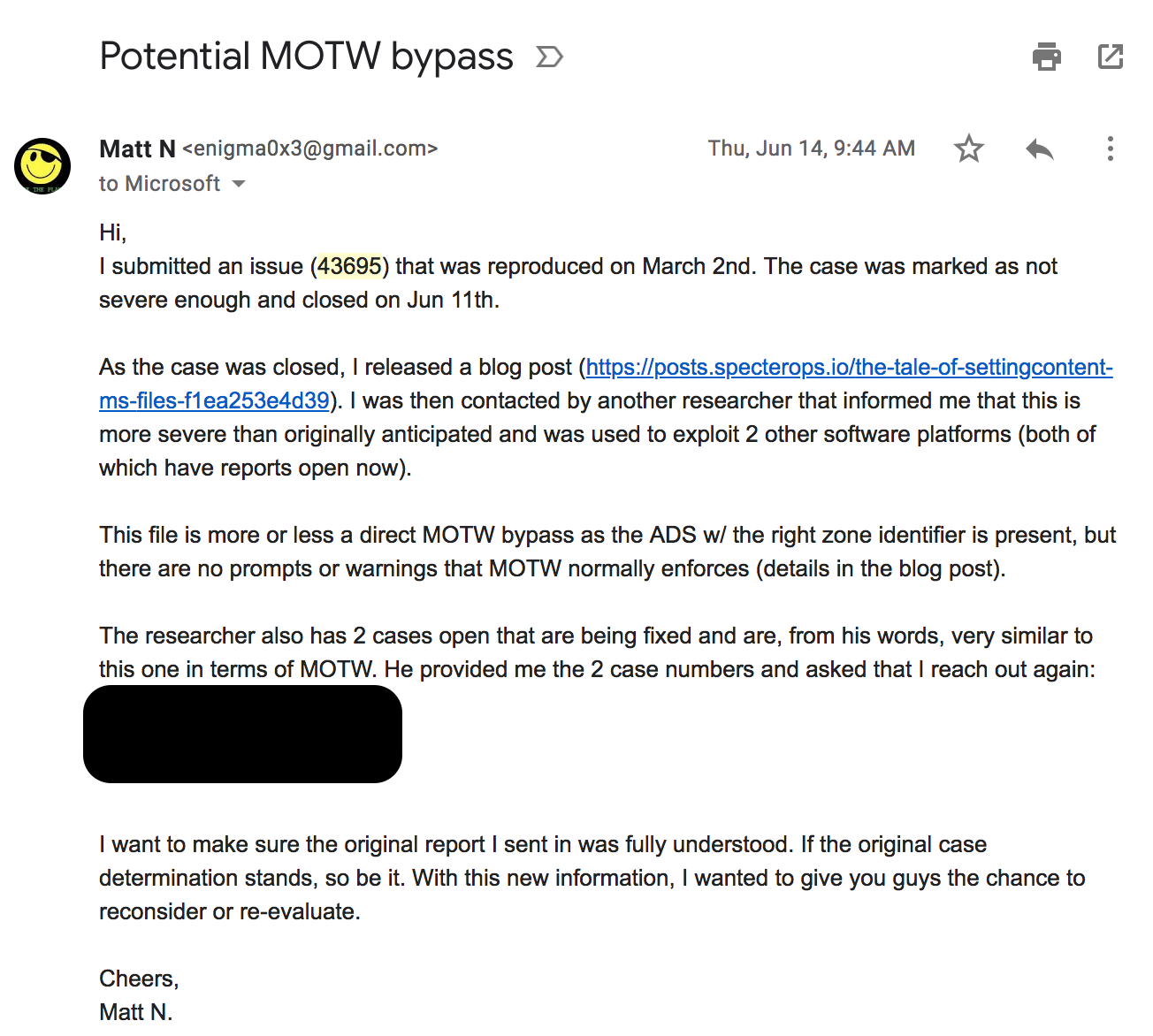

Given that response, I went ahead and blogged about it on June 11, 2018. After I dropped the post, I was contacted pretty quickly by another researcher on Twitter letting me know that my blog post resulted in 0days in Chrome and Firefox due to Mark-of-the-Web (MOTW) implications on the .SettingContent-ms file format. Given this new information, I sent a fresh email to MSRC on June 14, 2018 at 9:44am:

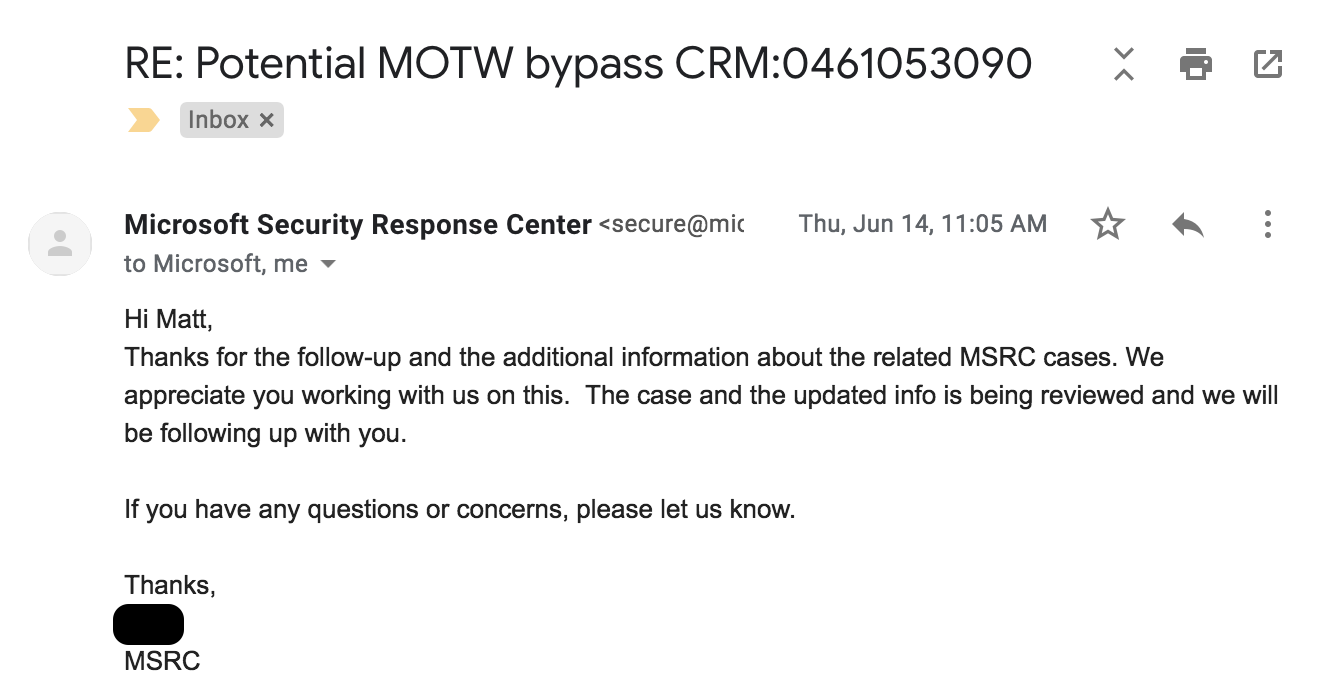

At this point, I saw two exploits impacting Google Chrome and Mozilla Firefox that utilized the .SettingContent-ms file format. After resending details, I got an email on June 14, 2018 at 11:05am, in which MSRC informed me the case would be updated:

On June 26, 2018 at 12:17pm, I sent another email to MSRC letting them know that Mozilla issued CVE-2018-12368 due to the bug:

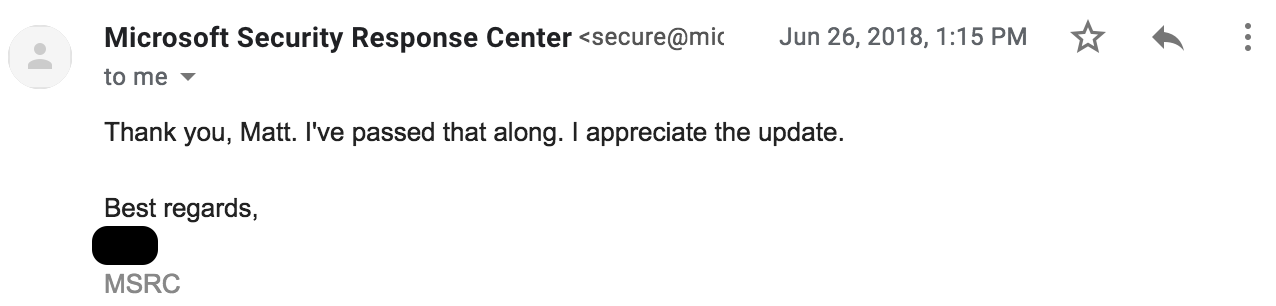

That same day, MSRC informed me that the additional details would be passed along to the team:

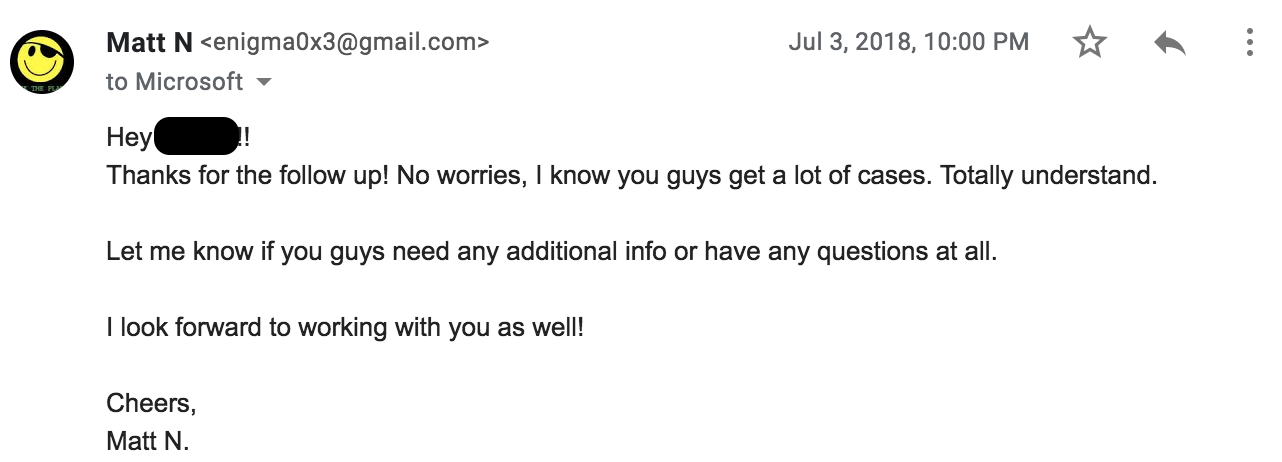

This is where things really took a turn. I received another email on July 3, 2018 at 9:52pm stating that my case had been reassigned once again, and that they are re-evaluating the case based on various other MSRC cases, the Firefox CVE, and the pending fixes to Google Chrome:

This is where sympathy can come into play. We are all just people doing jobs. While the process I went through sucked, I’m not bitter or angry about it. So, my response went like this:

After some time, I became aware that some crimeware groups were utilizing the technique in some really bad ways (https://www.proofpoint.com/us/threat-insight/post/ta505-abusing-settingcontent-ms-within-pdf-files-distribute-flawedammyy-rat). After seeing it being used in the wild, I let MSRC know:

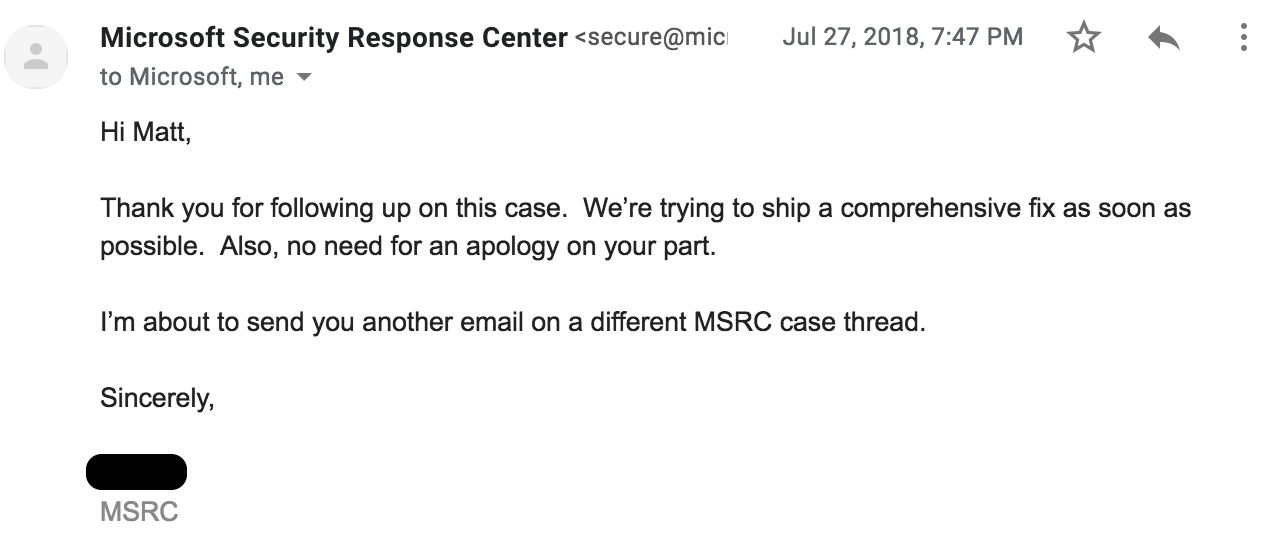

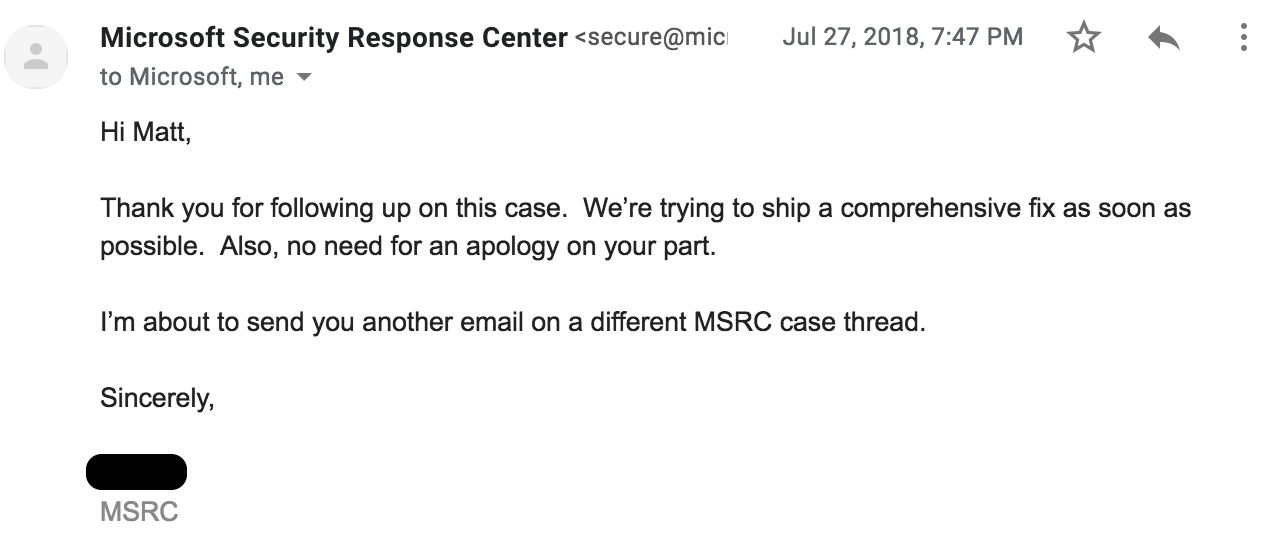

MSRC quickly let me know that they are going to ship a fix as quickly as possible…which is a complete 180 compared to the original report assessment:

Additionally, there was mention of “another email on a different MSRC case thread”. That definitely piqued my interest. A few days later, I got a strange email with a different case number than the one originally assigned:

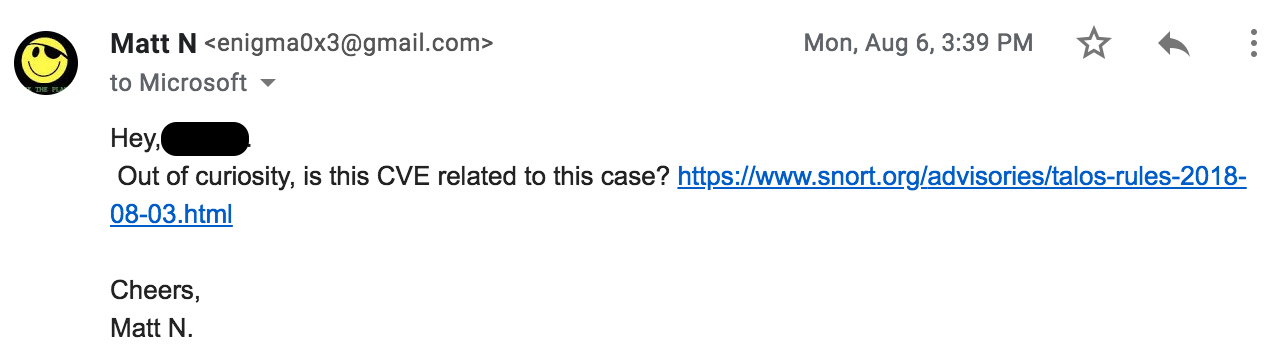

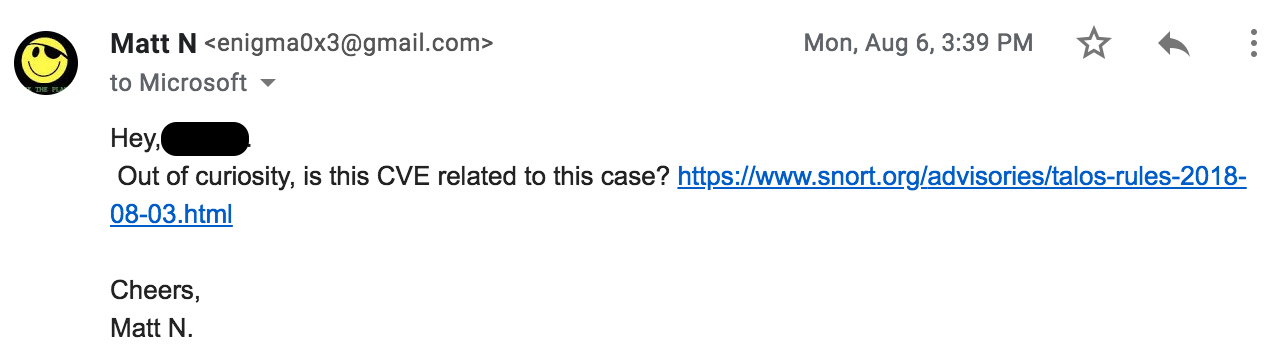

At this point, my jaw was on the floor. After sending some additional information to a closed MSRC case, the bug went from a “won’t fix” to “we are going to ship a fix as quickly as possible, and award you a bounty, too”. After some minor logistical exchanges with the Microsoft Bounty team, I saw that CVE-2018-8414 landed a spot on cve.mitre.org. This was incredibly interesting given that, not long before, the issue was sitting as a “won’t fix”. So, I asked MSRC about it:

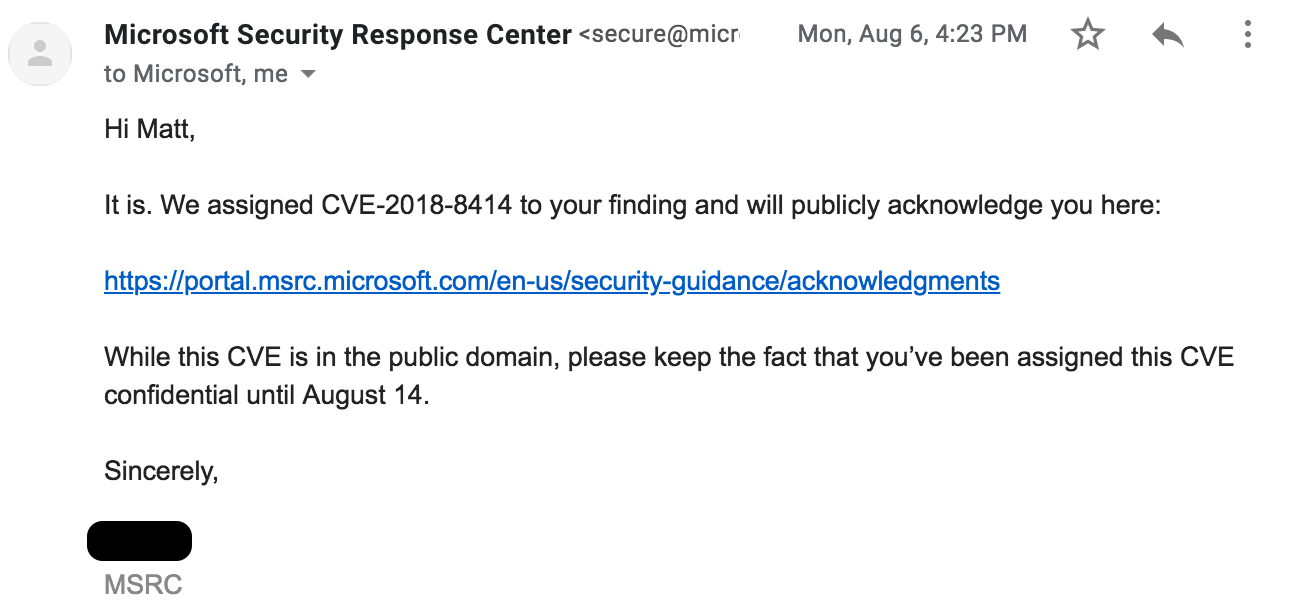

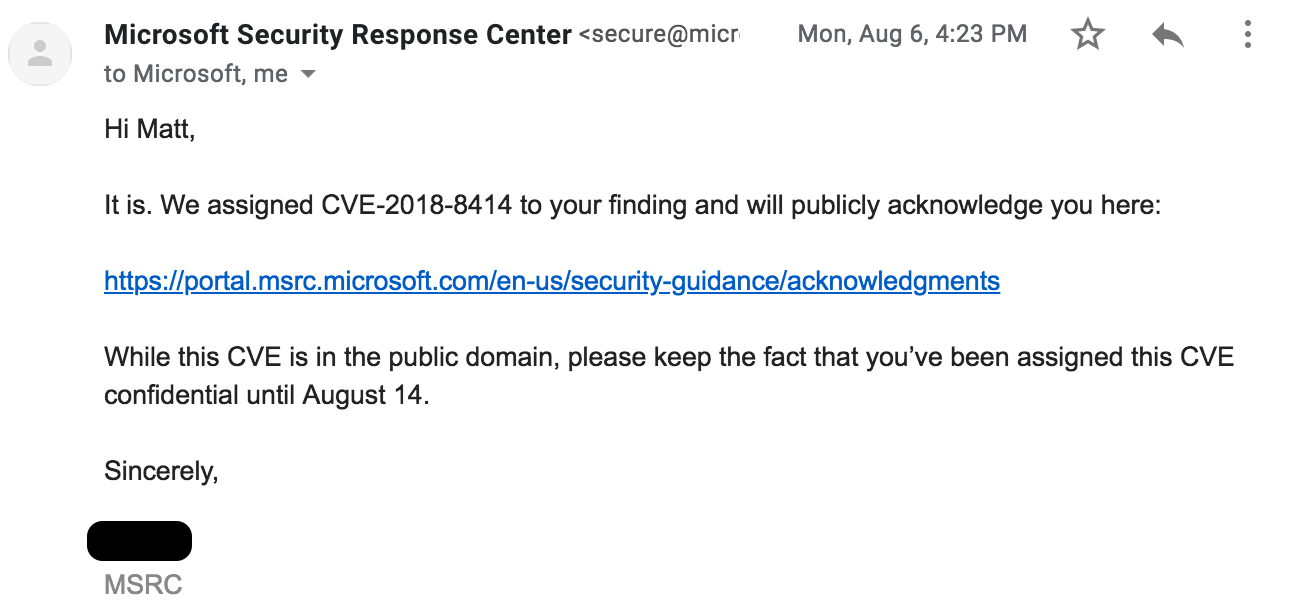

This is when I quickly found out that CVE-2018-8414 was being issued for the .SettingContent-ms RCE bug:

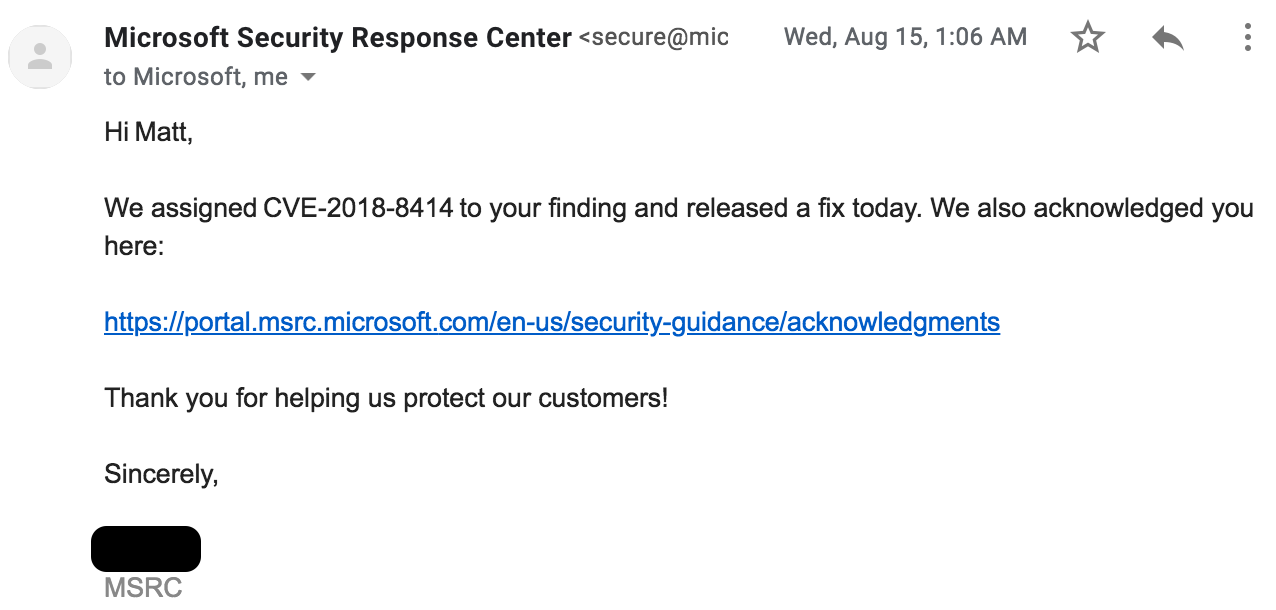

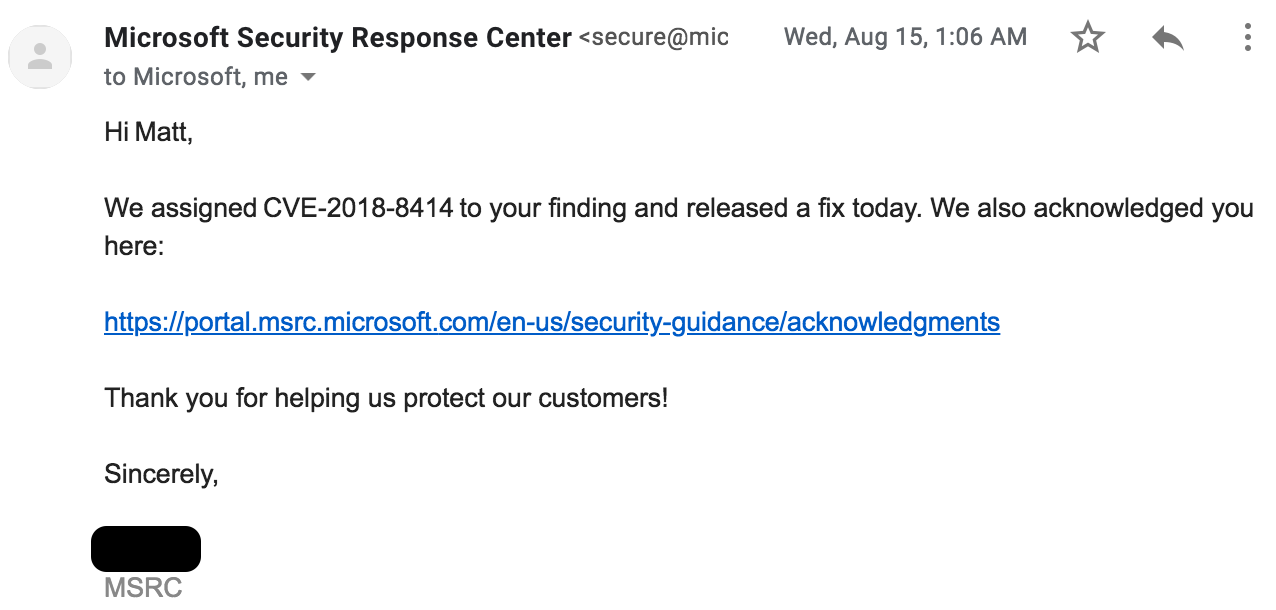

This is where the process gets cool. Previously, I disclosed a bug. That bug was given a “won’t fix” status. So, I blogged about it (https://posts.specterops.io/the-tale-of-settingcontent-ms-files-f1ea253e4d39). I then found out it had been used to exploit two browsers, and it was being used in the wild. Instead of letting things sit, I was proactive with MSRC and let them know about all of this. Once the August Patch Tuesday came around, I received this email:

Yay!!! So Microsoft took a “Won’t Fix” bug and reassessed it based on new information I had provided once the technique was public. After a few more days and some logistical emails with Microsoft, I received this:

I have to give it to Microsoft for making things right. This bug report went from “won’t fix” to a CVE, public acknowledgement and a $15,000 bounty pretty quickly.

As someone who likes to critique myself, I can’t help but acknowledge that the original report was mostly focused on Office 2016 OLE and Windows Defender ASR, neither of which is a serviceable bug (though RCE was mentioned). How could I have done better, and what did I learn?

If you have a bug, demonstrate the most damage it can do. I can’t place all the fault on myself, though. While I may have communicated the *context* of the bug incorrectly, MSRC’s triage and product teams should have caught the implications in the original report, especially since I mentioned “which enables code-execution from the internet with no security warnings for the user”.

This brings me to my next point. We are all human beings. I made a mistake in not clearly communicating the impact/context of my bug. MSRC made a mistake in the assessment of the bug. It happens.

Here are some key points I learned during this process:

- Vendors are people. Try to do right by them, and hopefully they try to do right by you. MSRC gave me a CVE, an acknowledgement and a $15,000 bounty for a bug which ended up being actively exploited before being fixed.

- Vendors: PLEASE COMMUNICATE WITH YOUR RESEARCHERS. This is the largest issue I have with vulnerability disclosure. This doesn’t just apply to Microsoft; it applies to every vendor. If you leave a researcher in the dark, without any sort of proactive response (or an actual response), your bugs will end up in the last place you want them.

- If you think your bug was misdiagnosed, see it through by following up and stating your case. Can any additional information be provided that might be useful? If you get a bug that is issued a “won’t fix”, and then you see it being exploited left and right, let the vendor know. This information could change the game for both you and their customers.

Vulnerability disclosure is, and will continue to be, a hard problem. Why? Because there are vendors out there that will not do right by their researchers. I am sitting on 0days in products due to a hostile relationship with “VendorX” (not Microsoft, to be clear). I also send literally anything I think might remotely resemble a bug to other vendors, because they do right by me.

At the end of the day, treat people the way you would like to be treated. This applies to both vendors and researchers. We are all in this to make things better. Stop adding roadblocks.

Timeline:

Feb 16, 2018 at 2:37 PM EDT: Report submitted to secure@microsoft.com

Feb 16, 2018 at 4:34 PM EDT: MSRC acknowledged the report and opened a case

March 2, 2018 at 12:27 PM EDT: MSRC responded noting they could reproduce the issue

April 24, 2018 at 4:06 PM EDT: Requested an update on the case

April 25, 2018 at 12:42 PM EDT: Case was reassigned to another case handler.

June 1, 2018 at 1:29 PM EDT: Asked new case handler for a case update

June 4, 2018 at 10:29 AM EDT: Informed the issue was below the bar for servicing; case closed.

June 11, 2018: Issue is publicly disclosed via a blog post

June 14, 2018 at 9:44 AM EDT: Sent MSRC a follow-up after hearing of two browser bugs that used the technique

June 14, 2018 at 11:05 AM EDT: Case was updated with new information

June 26, 2018 at 12:17 PM EDT: Informed MSRC of Mozilla CVE (CVE-2018-12368)

June 26, 2018 at 1:15 PM EDT: MSRC passed the Mozilla CVE to the product team

July 3, 2018 at 9:52 PM EDT: Case was reassigned to another case handler

Jul 23, 2018 at 4:49 PM EDT: Let MSRC know .settingcontent-ms was being abused in the wild.

Jul 27, 2018 at 7:47 PM EDT: MSRC informed me they are shipping a fix ASAP

Jul 27, 2018 at 7:55 PM EDT: MSRC informed me of bounty qualification

Aug 6, 2018 at 3:39 PM EDT: Asked MSRC if CVE-2018-8414 was related to the case

Aug 6, 2018 at 4:23 PM EDT: MSRC confirmed CVE-2018-8414 was assigned to the case

Aug 14, 2018: Patch pushed out to the public

Sept 28, 2018 at 4:36 PM EDT: $15,000 bounty awarded

Before publishing this blog post, I asked MSRC to review it and offer any comments they may have. They asked that I include an official response statement from them, which you can find below:

Cheers,

Matt N.